New unified platform combines AI voice agent automation with real-time agent assistance and Auto QA, enabling healthcare payers to reduce average handle time (AHT) and improve first contact resolution (FCR) in their call centers.

IRVING, Texas and SAN FRANCISCO, Jan. 7, 2026 /PRNewswire-PRWeb/ -- Voicegain, a leader in AI Voice Agents and Infrastructure, today announced the acquisition of TrampolineAI, a venture-backed healthcare payer-focused Contact Center AI company whose products support thousands of member interactions. The acquisition unifies Voicegain's AI Voice Agent automation with TrampolineAI's real-time agent assistance and Auto QA capabilities, enabling healthcare payers to optimize their entire contact center operation, from fully automated interactions to AI-enhanced human agent support.

Healthcare payer contact centers face mounting pressure to reduce costs while improving member experience. The reasons range from CMS pressure and Medicaid redeterminations to Medicare AEP volume and staffing shortages. The challenge lies in balancing automation for routine inquiries with personalized support for complex interactions. The combined Voicegain and TrampolineAI platform addresses this challenge with a solution that spans the full spectrum of contact center needs, automating high-volume routine calls while empowering human agents with real-time intelligence for interactions that require specialized attention.

"We're seeing strong demand from healthcare payers for a production-ready Voice AI platform. TrampolineAI brings deep payer contact center expertise and deployments at scale, accelerating our mission at Voicegain." — Arun Santhebennur

Over the past two years, Voicegain has scaled Casey, an AI Voice Agent purpose-built for health plans, TPAs, utilization management, and other healthcare payer businesses. Casey answers and triages member and provider calls in health insurance payer call centers. After performing HIPAA validation, Casey automates routine caller intents related to claims, eligibility, coverage/benefits, and prior authorization. For calls requiring live assistance, Casey transfers the interaction context via screen pop to human agents.

TrampolineAI has developed a payer-focused Generative AI suite of contact center products—Assist, Analyze, and Auto QA—designed to enhance human agent efficiency and effectiveness. The platform analyzes conversations between members and agents in real-time, leveraging real-time transcription and Gen AI models. It provides real-time answers by scanning plan documents such as Summary of Benefits and Coverage (SBCs) and Summary Plan Descriptions (SPDs), fills agent checklists automatically, and generates payer-optimized interaction summaries. Since its founding, TrampolineAI has established deployments with leading TPAs and health plans, processing hundreds of thousands of member interactions.

"Our mission at Voicegain is to enable businesses to deploy private, mission-critical Voice AI at scale," said Arun Santhebennur, Co-founder and CEO of Voicegain. "As we enter 2026, we are seeing strong demand from healthcare payers for a comprehensive, production-ready Voice AI platform. The TrampolineAI team brings deep expertise in healthcare payer operations and contact center technology, and their solutions are already deployed at scale across multiple payer environments."

Through this acquisition, Voicegain expands the Casey platform with purpose-built capabilities for payer contact centers, including AI-assisted agent workflows, real-time sentiment analysis, and automated quality monitoring. TrampolineAI customers gain access to Voicegain's AI Voice Agents, enterprise-grade Voice AI infrastructure including real-time and batch transcription, and large-scale deployment capabilities, while continuing to receive uninterrupted service.

"We founded TrampolineAI to address the significant administrative cost challenges healthcare payers face by deploying Generative Voice AI in production environments at scale," said Mike Bourke, Founder and CEO of TrampolineAI. "Joining Voicegain allows us to accelerate that mission with their enterprise-grade infrastructure, engineering capabilities, and established customer base in the healthcare payer market. Together, we can deliver a truly comprehensive solution that serves the full range of contact center needs."

A TPA deploying TrampolineAI noted the platform's immediate impact, stating that the data and insights surfaced by the application were fantastic, allowing the organization to see trends and issues immediately across all incoming calls.

The combined platform positions Voicegain to deliver a complete contact center solution spanning IVA call automation, real-time transcription and agent assist, Medicare and Medicaid compliant automated QA, and next-generation analytics with native LLM analysis capabilities. Integration work is already in progress, and customers will begin seeing benefits of the combined platform in Q1 2026.

Following the acquisition, TrampolineAI founding team members Mike Bourke and Jason Fama have joined Voicegain's Advisory Board, where they will provide strategic guidance on product development and AI innovation for healthcare payer applications.

The terms of the acquisition were not disclosed.

About Voicegain

Voicegain offers healthcare payer-focused AI Voice Agents and a private Voice AI platform that enables enterprises to build, deploy, and scale voice-driven applications. Voicegain Casey is designed specifically for healthcare payers, supporting automated and assisted customer service interactions with enterprise-grade security, scalability, and compliance. For more information, visit voicegain.ai.

About TrampolineAI

TrampolineAI was a venture-backed voice AI company focused on healthcare payer solutions. The company applies Generative Voice AI to contact centers to improve operational efficiency, member experience, and compliance through real-time agent assist, sentiment analysis, and automated quality assurance technologies. For more information, visit trampolineai.com.

Media Contact:

Arun Santhebennur

Co-founder & CEO, Voicegain

Arun Santhebennur, Voicegain, 1 9725180863 701, arun@voicegain.ai, https://www.voicegain.ai

SOURCE Voicegain

Updated: Feb 28 2022

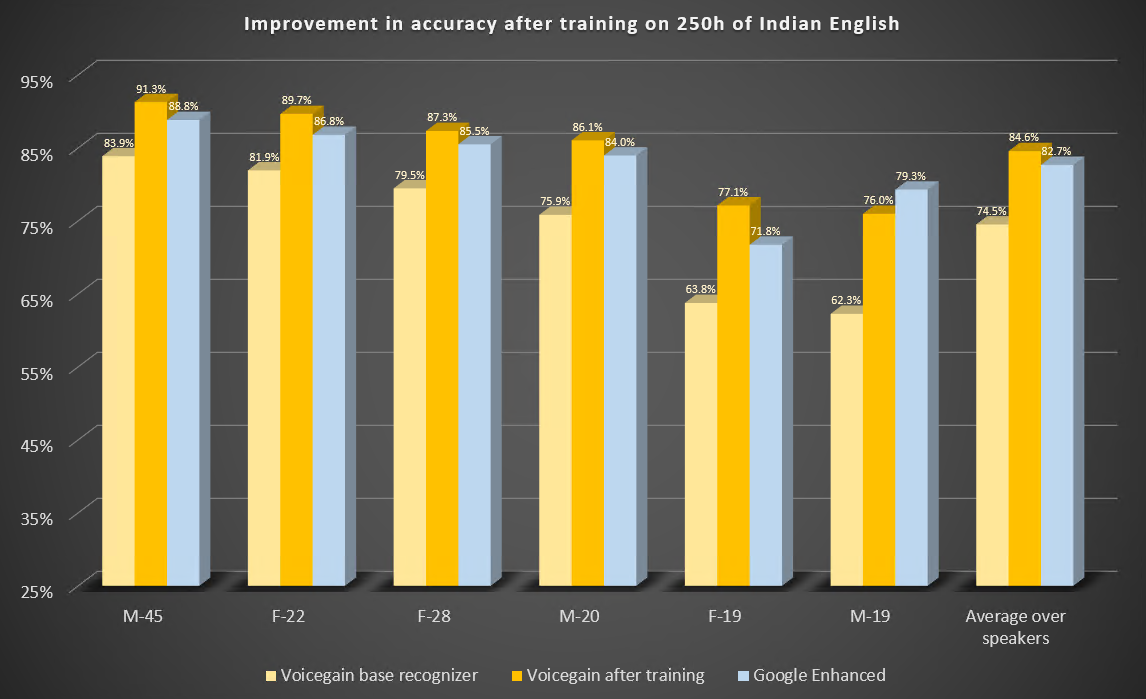

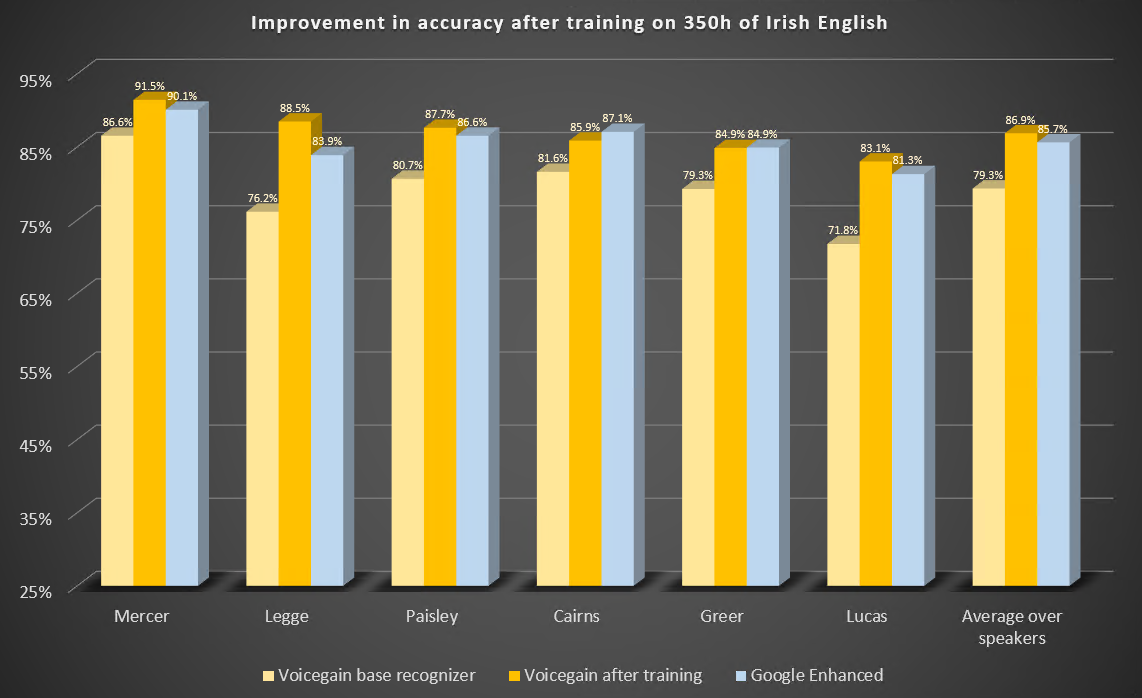

In this blog post we describe two case studies to illustrate improvements in speech-to-text or ASR recognition accuracy that can be expected from training of the underlying acoustic models. We trained our acoustic model to recognize Indian and Irish English better.

The Voicegain out-of-the-box Acoustic Model which is available as default on the Voicegain Platform had been trained to recognize mainly US English although our training data set did contain some British English audio. The training data did not contain Indian and Irish English, except for maybe accidental occurrences.

Both case studies were performed in an identical manner:

Here are the parameters of this study.

Here are the results of the benchmark before and after training. For comparison, we also include results from Google Enhanced Speech-to-Text.

Some observations:

Here are the parameters of this study.

Here are the results of the benchmark before and after training. We also include results from Google Enhanced Speech-to-Text.

Some observations:

We have published 2 additional studies showing the benefits of Acoustic Model training:

1. Click here for instructions to access our live demo site.

2. If you are building a cool voice app and you are looking to test our APIs, click here to sign up for a developer account and receive $50 in free credits.

3. If you want to take Voicegain as your own AI Transcription Assistant to meetings, click here.

In our previous post we described how Voicegain is providing grammar-based speech recognition to Twilio Programmable Voice platform via the Twilio Media Stream Feature.

Starting from release 1.16.0 of the Voicegain Platform and API, it is possible to use Voicegain speech-to-text for speech transcription (without grammars) to achieve functionality similar to TwiML <Gather>.

The reasons we think it will be attractive to Twilio users are:

Using Voicegain as an alternative to <Gather> will have similar steps to using Voicegain for grammar-based recognition - these are listed below.

This is done by invoking Voicegain async transcribe API: /asr/transcribe/async

Below is an example of the payload needed to start a new transcription session:

Some notes about the content of the request:

This request, if successful, will return the websocket url in the audio.stream.websocketUrl field. This value will be used in making a TwiML request.
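As a minimal sketch of this step, the Python below prepares the POST to /asr/transcribe/async and reads audio.stream.websocketUrl from the parsed response. Only the endpoint path and the response field name come from the text above; the base URL, the bearer-token header, and the function names are assumptions made for illustration, and the payload is left to the caller.

```python
import json
import urllib.request

# Assumption: base URL of the API. Only the endpoint path below is from the text.
API_BASE = "https://api.voicegain.ai/v1"


def build_transcribe_request(jwt_token: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST to the async transcribe endpoint."""
    return urllib.request.Request(
        url=f"{API_BASE}/asr/transcribe/async",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the API accepts a bearer token in this header.
            "Authorization": f"Bearer {jwt_token}",
        },
        method="POST",
    )


def extract_websocket_url(response_body: dict) -> str:
    """Return audio.stream.websocketUrl, or raise if the field is missing."""
    try:
        return response_body["audio"]["stream"]["websocketUrl"]
    except (KeyError, TypeError) as exc:
        raise ValueError("response has no audio.stream.websocketUrl") from exc
```

In use, the request would be sent with urllib.request.urlopen, the JSON body parsed with json.load, and the result passed to extract_websocket_url before building the TwiML.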

Note, in the transcribe mode DTMF detection is currently not possible. Please let us know if this is something that would be critical to your use case.

After we have initiated a Voicegain ASR session, we can tell Twilio to open Media Streams connection to Voicegain. This is done by means of the following TwiML request:
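One way to produce such a TwiML document in Python is sketched below. It assumes the <Connect><Stream> form that Twilio documents for Media Streams; Twilio also documents a <Start><Stream> form, and which of the two applies here, as well as any extra attributes or parameters, is not confirmed by this text. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def media_stream_twiml(websocket_url: str) -> str:
    """Build TwiML that asks Twilio to stream call audio to websocket_url.

    Assumption: the <Connect><Stream url="..."> form is used.
    """
    response = ET.Element("Response")
    connect = ET.SubElement(response, "Connect")
    # The url is the value returned in audio.stream.websocketUrl.
    ET.SubElement(connect, "Stream", {"url": websocket_url})
    return ET.tostring(response, encoding="unicode")
```

Building the document with ElementTree rather than string formatting ensures the URL is escaped correctly as an XML attribute.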

Some notes about the content of the TwiML request:

Below is an example response from the transcription in the case where "content": {"full": ["transcript"]}.

We want to share a short video showing live transcription in action at CBC. This one is using our baseline Acoustic Model. No customizations were made, no hints used. This video gives an idea of what latency is achievable with real-time transcription.

Automated real-time transcription is a great solution for accommodating the hearing impaired if no sign-language interpreter is available. It can be used, e.g., at churches to transcribe sermons, at conventions and meetings to transcribe talks, at educational institutions (schools, universities) to live transcribe lessons and lectures, etc.

Voicegain Platform provides a complete stack to support live transcription:

Very high accuracy - above that provided by Google, Amazon, and Microsoft Cloud speech-to-text - can be achieved through Acoustic Model customization.

Voicegain adds grammar-based speech recognition to Twilio Programmable Voice platform via the Twilio Media Stream Feature.

The difference between Voicegain speech recognition and Twilio TwiML <Gather> is:

When using Voicegain with Twilio, your application logic will need to handle callback requests from both Twilio and Voicegain.

Each recognition will involve two main steps described below:

This is done by invoking Voicegain async recognition API: /asr/recognize/async

Below is an example of the payload needed to start a new recognition session:

Some notes about the content of the request:

This request, if successful, will return the websocket url in the audio.stream.websocketUrl field. This value will be used in making a TwiML request.

Note, if the grammar is specified to recognize DTMF, the Voicegain recognizer will recognize DTMF signals included in the audio sent from Twilio Platform.

After we have initiated a Voicegain ASR session, we can tell Twilio to open Media Streams connection to Voicegain. This is done by means of the following TwiML request:

Some notes about the content of the TwiML request:

Below is an example response from the recognition. This response is from built-in phone grammar.

Some of the feedback that we received regarding the previously published benchmark data, see here and here, concerned the fact that the Jason Kincaid data set contained some audio that produced terrible WER across all recognizers, and in practice no one would use automated speech recognition on such files. That is true. In our opinion, there are very few use cases where WER worse than 20%, i.e. where on average 1 in every 5 words is recognized incorrectly, is acceptable.
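For readers unfamiliar with the metric, here is a minimal sketch of the standard word error rate calculation: the word-level edit distance (substitutions, deletions, insertions) divided by the number of reference words. It splits on whitespace and does no case or punctuation normalization, which is an assumption of this sketch and not necessarily the normalization used in the benchmark.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of five gives a WER of 20%.
print(word_error_rate("the quick brown fox jumps", "the quick brown box jumps"))  # 0.2
```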

What we have done for this blog post is remove from the reported set those benchmark files for which none of the recognizers tested could deliver WER of 20% or less. This criterion resulted in the removal of 10 files - 9 from the Jason Kincaid set of 44 and 1 file from the rev.ai set of 20. The files removed fall into 3 categories:

As you can see, the Voicegain and Amazon recognizers are very evenly matched, with average WER differing by only 0.02%. The same holds for the Google Enhanced and Microsoft recognizers, with a WER difference of only 0.04%. The WER of Google Standard is about twice that of the other recognizers.
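The exclusion rule used for this comparison (drop a file when no recognizer reaches a WER of 20% or less) can be sketched as follows. The function name, the data layout, and the sample numbers are illustrative assumptions, not benchmark data.

```python
def split_by_best_wer(results: dict, threshold: float = 20.0):
    """Split files into (kept, removed) based on the best WER per file.

    results maps file name -> {recognizer name: WER in percent}.
    A file is removed only when every recognizer is above the threshold.
    """
    kept, removed = {}, {}
    for file_name, wer_by_recognizer in results.items():
        best = min(wer_by_recognizer.values())
        target = kept if best <= threshold else removed
        target[file_name] = wer_by_recognizer
    return kept, removed


# Made-up values for illustration only.
sample = {
    "clean.wav": {"A": 9.5, "B": 11.0},
    "borderline.wav": {"A": 27.0, "B": 20.0},
    "noisy.wav": {"A": 41.0, "B": 35.5},
}
kept, removed = split_by_best_wer(sample)
print(sorted(kept), sorted(removed))  # ['borderline.wav', 'clean.wav'] ['noisy.wav']
```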

[UPDATE - October 31st, 2021: Current benchmark results from end October 2021 are available here. In the most recent benchmark Voicegain performs better than Google Enhanced. Our pricing is now 0.95 cents/minute]

[UPDATE: For results reported using slightly different methodology see our new blog post.]

This is a continuation of the blog post from June where we reported the previous speech-to-text accuracy results. We encourage you to read it first, as it sets up a context to better understand the significance of benchmarking for speech-to-text.

Apart from that background intro, the key differences from the previous post are:

Here are the results.

Less than 3 months have passed since the previous test, so it is not surprising to see no improvement on the Google and Amazon recognizers.

The Voicegain recognizer has now overtaken Amazon by a hair's breadth in average accuracy, although Amazon's median accuracy on this data set is slightly above Voicegain's.

The Microsoft recognizer has improved during this time period - on the 44 benchmark files it is now on average better than Google Enhanced (in the chart we retained ordering from the June test). The single bad outlier in the Google Enhanced results does not alone account for the better average WER of Microsoft on this data set.

Google Standard is still very bad and we will likely stop reporting on it in detail in our future comparisons.

The audio from the 20-file rev.ai test is not as challenging as some of the files in the 44-file benchmark set. Consequently the results are on average better but the ranking of the recognizers does not change.

As you can see in this chart, on this data set the Voicegain recognizer is marginally better than Amazon. It has lower WER on 13 out of 20 test files and it beats Amazon in the mean and median values. On this data set Google Enhanced beats Microsoft.

Finally, here are the combined results for all the 64 benchmark files we tested.

On the combined benchmark Voicegain beats Amazon both in average and median WER, although the median advantage is not as big as on the 20-file rev.ai set. [Note that as of 2/10/21 Voicegain WER is now 16.46|14.26]

What we would like to point out is that when comparing Google Enhanced to Microsoft, one wins if we compare the average WER while the other has a better median WER value. This highlights that the results vary a lot depending on what specific audio file is being compared.
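A small sketch shows how the average and the median can rank two recognizers differently. The per-file WER values below are invented for illustration and are not taken from the benchmark: recognizer A is better on most files but has one bad outlier, while recognizer B is consistently mediocre.

```python
from statistics import mean, median


def summarize(wers):
    """Return the mean and median of a list of per-file WER values (percent)."""
    return {"mean": mean(wers), "median": median(wers)}


# Invented per-file WER values, for illustration only.
wer_a = [8.0, 9.0, 10.0, 11.0, 42.0]   # one bad outlier
wer_b = [12.0, 13.0, 14.0, 15.0, 16.0]

print(summarize(wer_a))  # {'mean': 16.0, 'median': 10.0}
print(summarize(wer_b))  # {'mean': 14.0, 'median': 14.0}
```

Here B has the lower mean while A has the lower median, which is why reporting both values gives a fuller picture than either alone.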

These results show that choosing the best recognizer for a given application should be done only after thorough testing. Performance of the recognizers varies a lot depending on the audio data and acoustic environment. Moreover, the prices vary significantly. We encourage you to try the Voicegain Speech-to-Text engine for your application. It might be a better fit for your application. Even if the accuracy is a couple of points behind the two top players, you might still want to consider Voicegain because:

Interested in customizing the ASR or deploying Voicegain on your infrastructure?